SEO Speed Humps

UPDATE: Video transcript available for ‘SEO Speed Humps’

I’ve been back “on the tools” recently as I record our Bloggers SEO System. I’m working on a bunch of different sites to demonstrate how to configure WordPress for the best possible ranking, as well as how to write content that ranks well. Yesterday I was recording a module about site speed and I noticed something I hadn’t seen before.

Page Speed & Load Times Are Different

It seems like a simple thing, but these two are very different metrics. Inside Google Search Console we are always looking at the “crawl rate” metrics. A slow site, as we know, will kill your rankings.

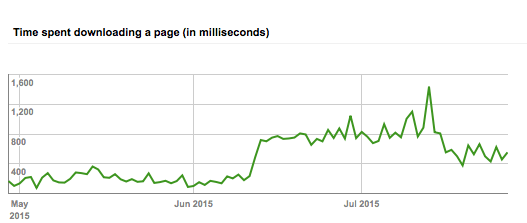

The above graph is something we are constantly looking at for all of our clients. The steep incline on the graph is our client’s site getting hammered, probably by something like a DDoS attack, soon after they left their previous SEO company. What a coincidence, eh? Anyway, you can see it gradually coming back under control. It’s important to understand what this graph represents. As the image says, it’s “time spent downloading a page”, but it’s an average and it only measures what Googlebot is actually downloading, which is purely text, not images. As a general guide, you really need to be faster than 1 second on this graph. When you get a spike in this graph it is usually server or site related, not web page related.
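If you want a rough feel for the same kind of number outside Search Console, here is a minimal Python sketch. It times the download of the HTML only (no images, CSS or scripts) and averages a handful of samples against the 1 second guide. The function names and the idea of sampling a few URLs yourself are my assumptions for illustration; this is not how Google calculates its graph.

```python
import time
import urllib.request
from statistics import mean


def time_html_download(url, timeout=10.0):
    """Return the seconds taken to download only the HTML of one URL.

    Images, CSS and scripts referenced by the page are not fetched.
    """
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read()
    return time.perf_counter() - start


def summarize_download_times(samples, threshold=1.0):
    """Average a list of download times (in seconds) and compare to a guide value."""
    average = mean(samples)
    return {
        "average": average,
        "slowest": max(samples),
        "under_threshold": average < threshold,
    }
```

To use it you would call `time_html_download` on a few of your own URLs, collect the results in a list and pass that list to `summarize_download_times`. Because it is an average, one very slow page can hide behind a lot of fast ones, which is why the slowest sample is reported as well.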

Page Speed

The Google PageSpeed Insights tool and WebPageTest are actually looking at individual web pages, not the whole site. They highlight why a page is slow to load and what needs to be fixed. These can sometimes include server-related issues like compression or caching not being enabled.
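The compression and caching checks can be approximated by looking at the response headers a page sends back. The Python sketch below is only a rough check under my own assumptions: the function name is hypothetical, and it simply looks for a `Content-Encoding` value and a `Cache-Control` or `Expires` header. The real tools look at far more than this.

```python
KNOWN_COMPRESSION = {"gzip", "br", "deflate", "zstd"}


def find_header_issues(headers):
    """Return a list of likely server-side speed issues for one response.

    `headers` is a plain dict of response headers, for example
    dict(response.headers) from urllib.request.urlopen.
    """
    # Header names are case-insensitive, so normalise them first.
    normalized = {name.lower(): value.lower() for name, value in headers.items()}
    issues = []

    encodings = {
        token.strip()
        for token in normalized.get("content-encoding", "").split(",")
    }
    if not encodings & KNOWN_COMPRESSION:
        issues.append("compression not enabled")

    # Presence check only: it does not judge whether the caching policy is good.
    if "cache-control" not in normalized and "expires" not in normalized:
        issues.append("caching not enabled")

    return issues
```

Keep in mind that a server normally only compresses a response when the request asks for it with an `Accept-Encoding` header, so send that header when you fetch the page or you may get a false alarm.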

My surprise

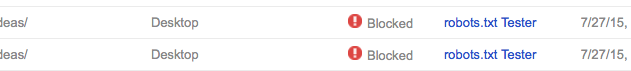

One of the sites I was looking at yesterday had an average load time in Google Search Console of close to 1 second. However, individual pages on the site had incredibly poor scores on PageSpeed Insights. One blog post scored 0/100! This was mainly due to massive image file sizes. Once they were fixed, PageSpeed Insights said 99/100 but then later reported 15/100. Google can be pretty flaky at times and yesterday was no exception. The same site had a badly configured robots.txt file. It had “Disallow:” with no path. Google (as it should) had pretty much ignored this non-explicit directive. Yesterday I submitted a page to be crawled and got the error below.

When I clicked on the link that took me to the robots tester tool in Search Console, it told me the bot was allowed. Then literally seconds later it told me it was disallowed! Google auto-inserted a / into the robots directive. It saw something was missing in the file and decided the whole site should be blocked. Weirdest thing. I quickly fixed the file and resubmitted my index request. I had never seen that happen before. I had already recorded the show, which is why that bit is not in it.
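To see how much difference that one character makes, here is a small sketch using Python’s built-in `urllib.robotparser`. It compares an empty “Disallow:” with “Disallow: /”. The `is_allowed` helper and the example.com URL are hypothetical, and this shows how Python’s parser reads the two files, not how Googlebot or the Search Console tester works internally.

```python
from urllib.robotparser import RobotFileParser

# The directive as it was on the site: no path after "Disallow:".
EMPTY_DISALLOW = "User-agent: *\nDisallow:\n"

# The same directive with a "/" added, which covers every path on the site.
SLASH_DISALLOW = "User-agent: *\nDisallow: /\n"


def is_allowed(robots_txt, url, user_agent="Googlebot"):
    """Return True if `user_agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/blog/post"
    print("Empty Disallow allows the page:", is_allowed(EMPTY_DISALLOW, page))
    print("Disallow: / allows the page:", is_allowed(SLASH_DISALLOW, page))
```

An empty “Disallow:” is treated as “nothing is blocked”, while “Disallow: /” blocks everything. If you want the whole site crawled, the safer option is to be explicit rather than leave a directive half finished.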

Faster equals higher rankings

One of the things Michelle, the site owner, did yesterday was go through and compress a lot of images using a WP plugin. Her site jumped 10 spots, from page 3 to page 2, for one of her target phrases.
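If you are not sure which images need attention, a quick way to find candidates is to list the biggest image files in your uploads folder. This Python sketch is an illustration only: the function name, the list of file extensions and the 200 KB default are my assumptions, and it only finds large files. It does not compress anything and has nothing to do with the plugin Michelle used.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif", ".webp"}


def find_large_images(root, max_bytes=200_000):
    """Return (path, size_in_bytes) pairs for images larger than `max_bytes`.

    Results are sorted with the largest file first.
    """
    large = []
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            size = path.stat().st_size
            if size > max_bytes:
                large.append((path, size))
    return sorted(large, key=lambda item: item[1], reverse=True)
```

On a WordPress site you would typically point this at a local copy of `wp-content/uploads`, then work through the list from the top.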

Jim’s been here for a while, you know who he is.