The New Google Search Console – What It Means For Your Website

UPDATE: As of 2018/09/04, Google has pushed Search Console out of Beta, with the new version now live and available to all users. It’s undergone some changes since this post was written, but the bulk of the content below is still relevant; only the names and locations of certain reports within Search Console may have changed.

The latest refresh to Google Search Console (which Jim covered in last week’s video) has been making its slow appearance in the form of a new beta version, which has been trickled out to current users. By trickled out, I mean that although we’ve got hundreds of websites and their different versions (HTTP, HTTPS etc) verified in our Search Console accounts, we have the beta available for only 8 of them, and only half of those have usable data. So it’s still early days, but what we’re seeing is pretty exciting, especially as it’s confirming what we’ve known and practiced for our clients for years.

The Beta Features

The latest Google Search Console edition has undergone a redesign, which has updated the interface to start reflecting the rest of Google’s properties – it’s sleeker, information is presented in a more user-friendly way (Google clearly valuing user experience here, take notes) and it’s becoming more prominent. It’s now located at https://search.google.com/search-console, the branding is more established & the new tool is just crying out for attention. Google wants you to use this, so if you have access to it, make sure you’re on it.

Status

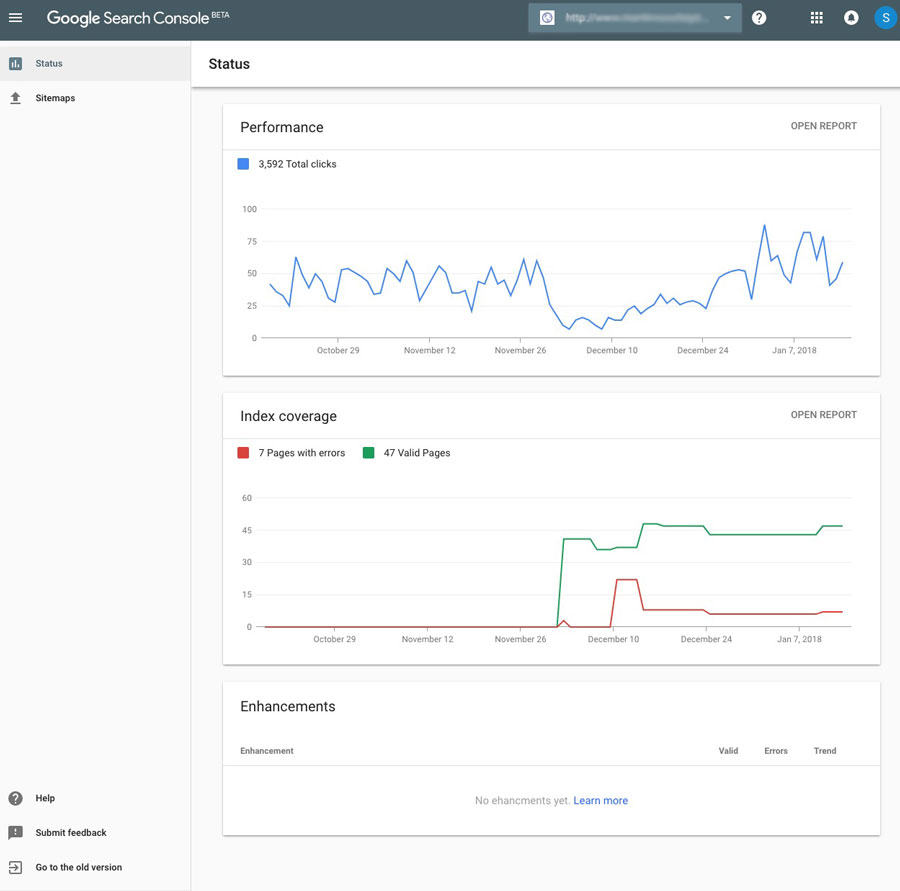

The first dashboard you’re presented with is the Status overview – a rundown of the Search Appearance, Index Status & a new, yet-to-be-filled segment dedicated to Enhancements.

Three-month overviews for both the Performance and Index Coverage graphs are great for a quick, top-down update, and if required we can click through to the detailed reports for a more comprehensive rundown.

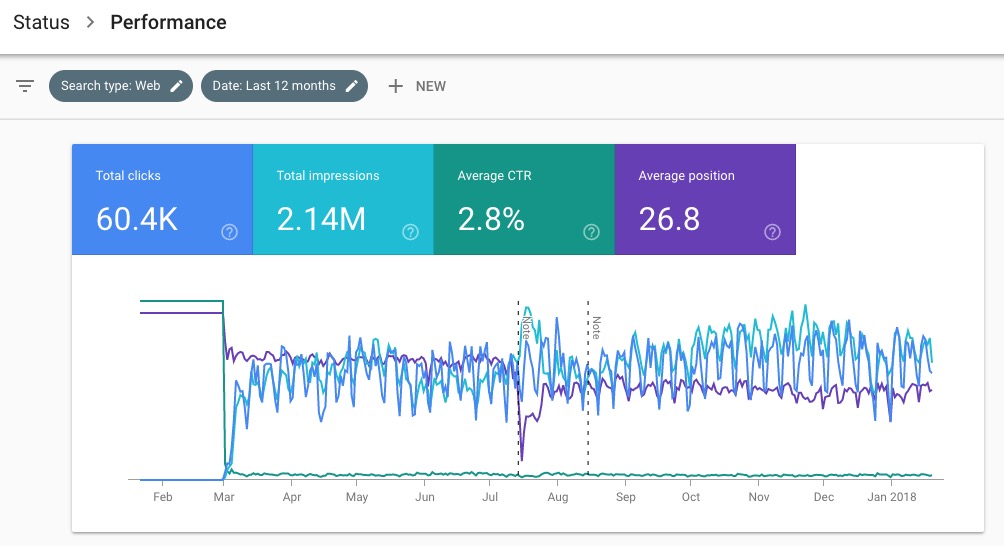

Performance

The Performance Report is essentially Search Analytics, just with more data available to you and a nicer interface. The previous maximum timeframe you could review data over was 90 days – we’re now given access to the “Full Duration”, which appears to be 16 months’ worth of history. This information is invaluable. We’ve hooked our clients’ sites up to a tool which stores that data for us, so we’re already keeping more than this new limit, but having it available directly from Google is fantastic for smaller site owners who don’t have the time to configure custom setups.

Other than the timeframe and design, there’s not much else new here. We appear to have lost the Difference column, which directly compared the movement of queries between two periods, however all the information needed to calculate that yourself is still in plain sight. There’s still a maximum limit of 1000 on the number of queries, but other than that it just appears to be a prettier, more comprehensive version of the current Search Analytics section.
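As a rough sketch of how the missing Difference column could be rebuilt by hand, the snippet below compares clicks per query across two periods. The query names and click counts are made up for illustration, and the function name `query_differences` is my own; in practice you’d feed in the figures exported from the Performance report.

```python
from typing import Dict, List, Tuple


def query_differences(
    previous: Dict[str, int], current: Dict[str, int]
) -> List[Tuple[str, int, int, int]]:
    """Return (query, previous_clicks, current_clicks, difference) rows.

    Queries missing from one period are treated as having 0 clicks there.
    Rows are sorted by the size of the movement, largest first.
    """
    rows = []
    for query in set(previous) | set(current):
        before = previous.get(query, 0)
        after = current.get(query, 0)
        rows.append((query, before, after, after - before))
    # Largest absolute movement first; query name breaks ties for stable output
    rows.sort(key=lambda row: (-abs(row[3]), row[0]))
    return rows


# Hypothetical click counts for two periods (not real data)
previous_period = {"seo audit": 120, "search console beta": 40, "index coverage": 15}
current_period = {"seo audit": 95, "search console beta": 130, "sitemap report": 22}

for query, before, after, diff in query_differences(previous_period, current_period):
    print(f"{query:22} {before:>5} {after:>5} {diff:+d}")
```

The same approach works for impressions or average position; only the numbers passed in change.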

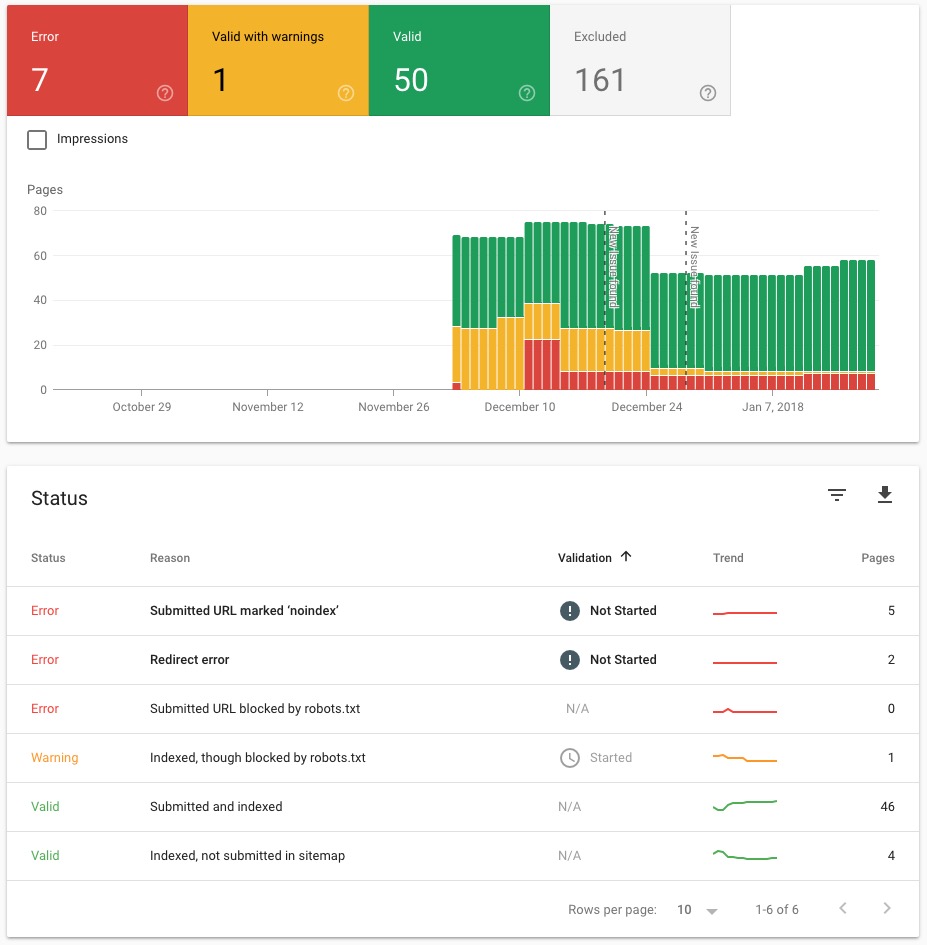

Index Coverage

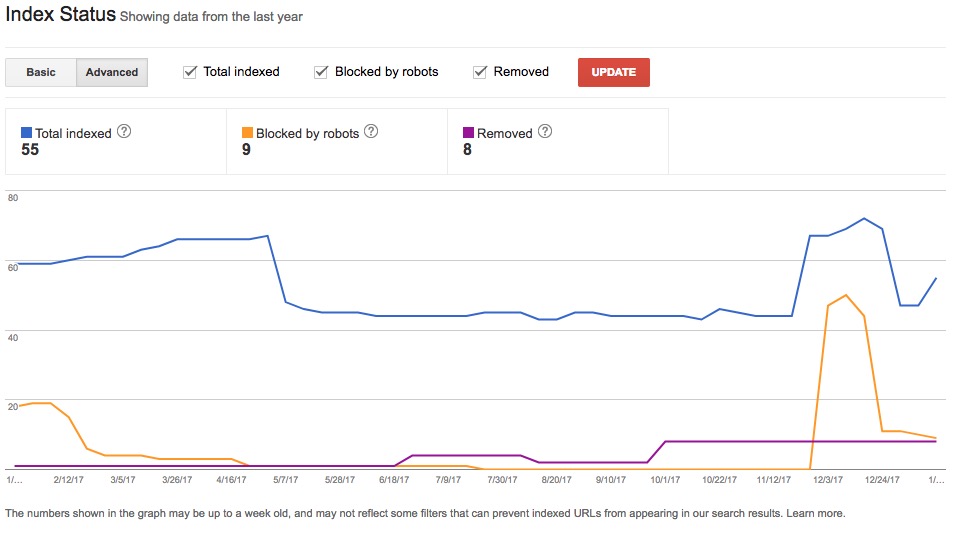

The Index Coverage report is a comprehensive refresh of the existing Google Index -> Index Status area. While the graph has been carried across, it’s presented very differently and alongside some much more valuable information, and it appears to have brought the Crawl Errors section beneath its umbrella.

When you compare this to the existing version in the screenshot below, you’ll notice the quality of the information presented has improved quite a bit – instead of a 3-line graph, we now have a bar graph with 4 options, alongside a breakdown of the Errors, Warnings, Valid and Excluded URLs. Keeping a clean index is about to become a lot easier.

The detailed information given to us by the Beta version is quite an improvement on the existing Search Console, with breakdowns for URLs of every status, from Errors and Warnings to Exclusions and even the Valid URLs. This extra detail will do wonders for keeping your Google Site Index clean and tidy, and if you want to rank well, you need to…

Keep It Clean

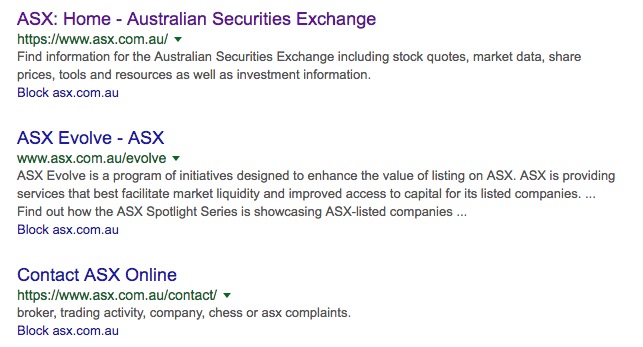

We’ve been harping on about the importance of keeping the Google Index clean for years now, and one of the first steps we take when bringing a new client on board is to assess the quality of their index, and if required (and in almost all cases, it is) we begin removing the low-quality pages. We typically find these pages through the “site:” search parameter – for example, a Google search for “site:asx.com.au” will return the pages Google has crawled and indexed throughout their website over the years. Thanks to quite a few years in the industry, we’re able to identify some major indexing issues on this page within the first two results, however for site owners who don’t have this expertise under their belts and aren’t sure what to look for, identifying index errors can be tricky.

Thanks to this latest Search Console refresh, we’re now not only getting an in-depth breakdown of the URLs Google encounters that it believes are not worth indexing, but we’re clearly being told – by Google – that keeping your index clean should be a very high priority. It’s not only one of the first features to be brought across to the Beta, but one that’s undergone an intensive overhaul to improve its value – if you haven’t been paying attention to your Index Status, now is the time to start.

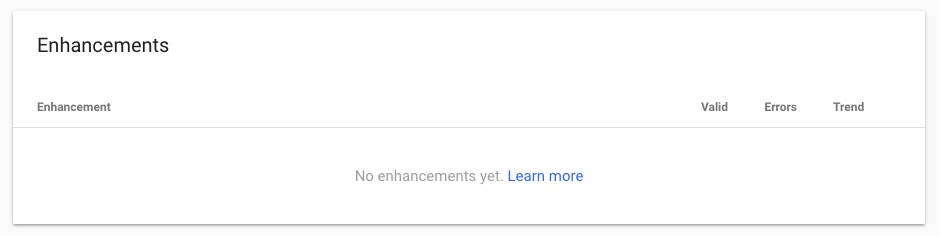

Enhancements

We’ve yet to see the Enhancements panel populated with information, however thanks to a support link within the section, we can get an idea of what will be coming in the near future.

Based on this support document, we can safely assume that the current Search Appearance section within Search Console will be consolidated and repurposed as Enhancements. Whether this will just be a run-down of the errors Google may encounter, similar to how the Structured Data section currently works, or whether it will merge with the Data Highlighter to make it easier for site owners to mark up their products, events, recipes, reviews etc, we’re unsure. With the Beta being redesigned with user experience as a major focus, we’re likely to see this section undergo a similar overhaul to the one we’ve seen for the Index Status area, with a more in-depth breakdown of the errors, an overview of valuable information Google has been able to ascertain without the structured markup around it, and the ability to test and mark up within Search Console, potentially meaning a move of the Structured Data Testing Tool into the Search Console Dashboard.

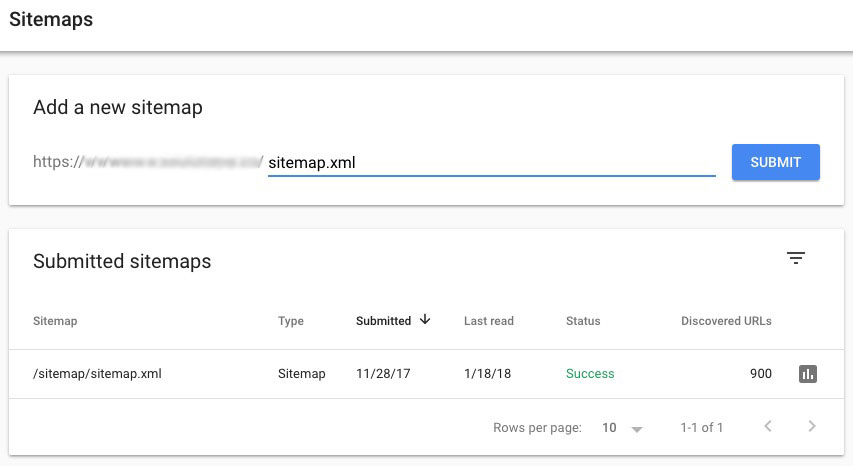

Sitemaps

Other than Status, the only other sidebar menu item we have to toy around with is the Sitemaps section, and there aren’t many interesting or fun features to play around with here.

The only takeaway I have when looking at this is that sitemaps are now easier to add. Combine this with the fact that you can view the Index Coverage report at a sitemap level, and suddenly we have a method to test how Google interprets any list of URLs we throw at it. This will give Webmasters much more control and understanding of the index, and I believe it will lead to the Index Status becoming a more important ranking factor. We’ll be exploring the potential this new feature provides over the next few months, and how it can be used to speed up the index clean-up process, so keep an eye out for updates in our blog (or better yet, subscribe through the form to the right and get the updates directly to your inbox).
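To give an idea of what “any list of URLs” might look like in practice, here’s a minimal sketch that turns a list of URLs into a sitemap file following the sitemaps.org XML format. The function name `build_sitemap` and the example.com URLs are placeholders of my own; this only produces the file, and submitting it still happens through the Sitemaps section as described above.

```python
import xml.etree.ElementTree as ET
from typing import Iterable

# Namespace defined by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: Iterable[str]) -> str:
    """Build a minimal XML sitemap (one <url><loc> entry per URL)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" when serialising
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs: swap in the group of pages you want to test
test_urls = [
    "https://example.com/category/widgets",
    "https://example.com/search?q=widgets&page=2",
]

print(build_sitemap(test_urls))
```

Grouping similar URLs (for example, all paginated or filtered pages) into their own sitemap should make it easier to see how that group is treated in the Index Coverage report.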

Still To Come?

While providing some new and exciting information, the Search Console Beta is still very much a beta. There are quite a few existing features and tools that need to be ported across, and hopefully some other much needed updates to help Webmasters keep a clean, user-friendly website.

Existing Search Console Features

One of the major aspects missing from the new Search Console is Search Appearance. This is definitely something that will be ported across, likely through the previewed “Enhancements” area. If this is the case, we’re about to see it undergo a similar transformation to the Index Status area. More detail provided for Rich Snippets is definitely coming, but I’m sceptical about whether we’ll see HTML Improvements or the Data Highlighter come along. HTML Improvements is essentially redundant now with the improved Index Coverage, and unless the Data Highlighter gets a significant makeover, I doubt we’ll be seeing it in its current form on the new Search Console.

The Wishlist

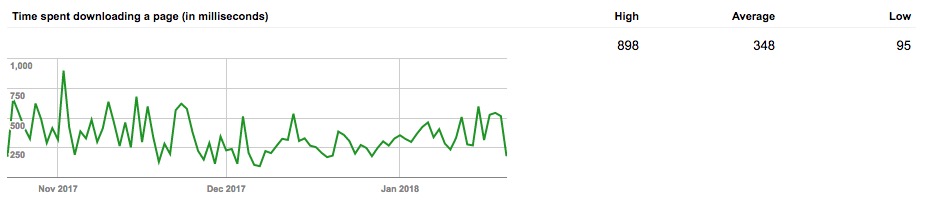

With Google’s push for “Mobile-First” hitting its stride this year, and the planned update to switch the primary index from the Desktop versions of websites to the Mobile versions, the number one feature I’d like to see revamped and improved is Crawl Stats, in particular the time spent downloading a page. Site Speed has become a vital ranking factor, as well as instrumental in maintaining a good conversion rate, and with the only measurement tool available within Search Console being Time Spent Downloading Pages under Crawl Stats, it feels like it’s been neglected.

Bringing the PageSpeed Insights tool into the Search Console fold isn’t something that will likely happen upon the Beta’s full launch, however I do foresee it being bundled into the Status > Index Coverage report sometime down the track, providing a rundown of each URL detected and how it performs.

Where’s This Taking Search?

Breaking down the beta, the update appears to have two overall themes: the UX, creating an easier-to-use tool for the less tech-savvy among us, and the quality of results within the index, which should lead to improvements to search results thanks to tidier, easier-to-assess websites.

This update is also coinciding with some interesting developments in the indexes of sites we keep track of. When doing a “site:” search, we’re starting to see URLs from different domains appear in what has traditionally been a search parameter reserved for listing the index of the domain entered. This could be a signal that Google is paying more attention to content referred to on your website, and treats it as if it had been uploaded directly to your website. So far, instances of this have been limited to resources – PDFs and Word documents uploaded at one location but linked to from a related website. We’ve mostly seen it in the financial sector, with investor updates from the ASX appearing in searches under different corporations’ domains, however it’s also occurring with common brochures & product information documents from suppliers on distributors’ & retailers’ sites.

Should this change your approach? I’d only be concerned about this happening if the document was also being hosted on the main domain, leading to potential duplicate content issues, though they’d be minor unless PDF rankings are important to your business. With little control over the other domain’s document, you’ll need to keep a close eye on it to ensure it isn’t removed, leaving you with a 404 that is both linked from your site and indexed.
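Keeping that close eye on externally hosted documents can be partly automated. The sketch below is one possible approach, not a tool we’re describing in this post: it sends a HEAD request to each document URL and flags anything that errors or can’t be reached. The function names and example.com URLs are hypothetical, and some servers reject HEAD requests, so treat a flagged URL as a prompt to check manually.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable, List, Tuple


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status for a HEAD request, or 0 if unreachable."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx/5xx responses are raised as HTTPError; the code is what we want
        return error.code
    except (urllib.error.URLError, OSError):
        return 0


def find_broken_documents(
    urls: Iterable[str], get_status: Callable[[str], int] = http_status
) -> List[Tuple[str, int]]:
    """Return (url, status) for every URL that is unreachable or >= 400."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status == 0 or status >= 400:
            broken.append((url, status))
    return broken


# Stubbed statuses so the example runs without network access (made-up URLs)
stub_statuses = {
    "https://example.com/investor-update.pdf": 200,
    "https://example.com/old-brochure.pdf": 404,
}
print(find_broken_documents(stub_statuses, get_status=stub_statuses.get))
```

Dropping the `get_status` argument makes the function perform real requests against the list of document URLs you pass in.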

Overall, I’m excited to see the fully-finished Search Console update, however this appears to be a while off and I wouldn’t be surprised to see a few more features added to the Beta before it’s launched. Keep a close eye on your inbox for the invitation from Google to join in on the beta – you will need to already have access to a verified Search Console property for this invite to be sent. If you’ve yet to set up Search Console, jump across to BloggersSEO and give our tutorial a squizz.

Have any features you’d like to see in the next iteration, or theories as to where Google is leading us with these updates? Let us know in the comments, I’m eager to hear other people’s opinions on this.

Boasting over a decade in the digital marketing landscape, this seasoned consultant excels, not just in technical SEO, but in providing comprehensive digital marketing solutions. With a solid foundation in growing organic search traffic and supervising website migrations, he has broadened his expertise to cover all aspects of digital marketing. Combining his knack for balancing SEO needs with design and user expectations, his skills extend to enhancing sales pitches and delivering expert training. He’s your go-to strategist for a well-rounded digital marketing approach without compromising on user experience.